AndrewNG-CV Basics

1 The Basics of Convolutional Neural Networks

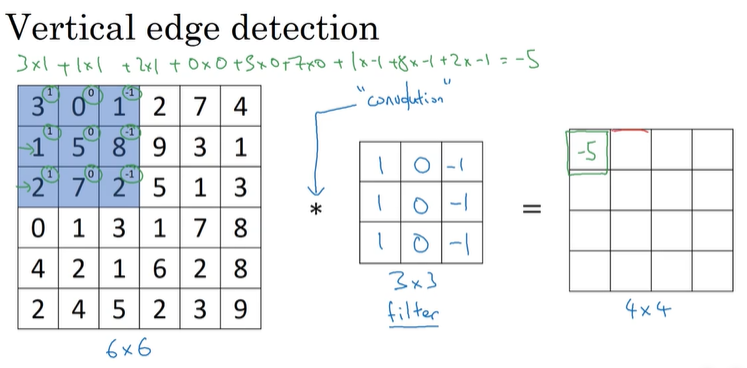

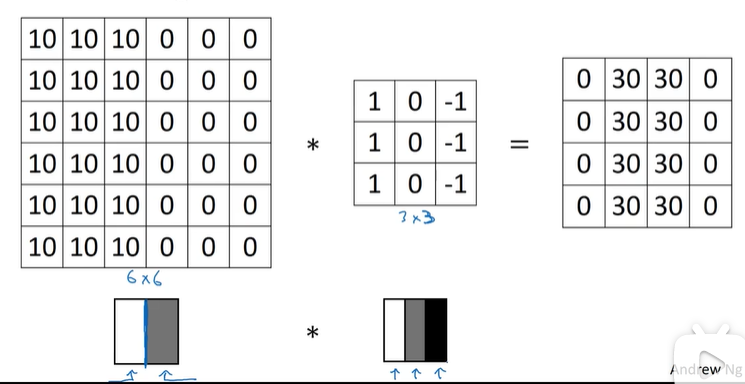

1.1 Edge detection

Use a filter (kernel) to perform the convolution operation.

One Example

Convolution function in tensorflow: tf.nn.conv2d

Other Examples

- Filter values going 1 -> -1: detects light -> dark transitions

- Filter values going -1 -> 1: detects dark -> light transitions

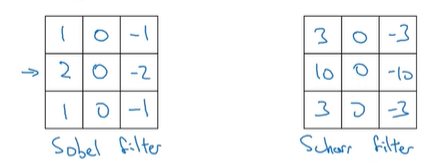

Furthermore, the 9 numbers in the filter can be treated as parameters and learned with backpropagation instead of being hand-picked.
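The edge-detection idea above can be sketched in plain Python. This is a minimal illustration, not the course's code: the helper name `conv2d_valid` and the 6x6 test image are assumptions made for the example. Like `tf.nn.conv2d`, it computes a cross-correlation (the filter is not flipped).

```python
def conv2d_valid(image, kernel):
    """Slide `kernel` over `image` (no padding, stride 1) and sum the products."""
    n_h, n_w = len(image), len(image[0])
    f_h, f_w = len(kernel), len(kernel[0])
    out = []
    for i in range(n_h - f_h + 1):
        row = []
        for j in range(n_w - f_w + 1):
            total = 0
            for u in range(f_h):
                for v in range(f_w):
                    total += image[i + u][j + v] * kernel[u][v]
            row.append(total)
        out.append(row)
    return out


# Hypothetical 6x6 image: bright (10) on the left half, dark (0) on the right.
image = [[10, 10, 10, 0, 0, 0] for _ in range(6)]
# Vertical edge filter whose columns go 1 -> 0 -> -1 (light -> dark).
vertical_filter = [[1, 0, -1] for _ in range(3)]

edges = conv2d_valid(image, vertical_filter)
for row in edges:
    print(row)  # each row is [0, 30, 30, 0]: the edge shows up in the middle
```

Mirroring the image left to right (dark -> light) flips the sign of the response, which is why the same filter distinguishes the two transition directions.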

1.2 Padding

Padding preserves the information at the edges and corners of the image and keeps the output from shrinking at every layer.

- Valid convolutions:

- No padding

- $(n\times n) * (f\times f) \rightarrow (n-f+1)\times(n-f+1)$

- Same convolutions:

- Pad so that output size is the same as the input size.

- $n+2p-f+1=n \Rightarrow p=\frac{f-1}{2}$

- $f$ is usually odd.
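The two padding modes above can be checked with a small sketch. The helper names `zero_pad`, `same_padding`, and `valid_output_size` are hypothetical, chosen only for this illustration.

```python
def zero_pad(image, p):
    """Return a copy of a 2-D list with p rows/columns of zeros on every side."""
    width = len(image[0]) + 2 * p
    padded = [[0] * width for _ in range(p)]
    for row in image:
        padded.append([0] * p + list(row) + [0] * p)
    padded.extend([0] * width for _ in range(p))
    return padded


def same_padding(f):
    """Padding p = (f - 1) / 2 that keeps the output size equal to the input size."""
    if f % 2 == 0:
        raise ValueError("this formula assumes an odd filter size f")
    return (f - 1) // 2


def valid_output_size(n, f):
    """Output size of a valid (unpadded, stride 1) convolution: n - f + 1."""
    return n - f + 1


n, f = 6, 3
image = [[1] * n for _ in range(n)]

p = same_padding(f)                       # 1
padded = zero_pad(image, p)               # 8 x 8
print(valid_output_size(n, f))            # 4: a valid convolution shrinks 6 -> 4
print(valid_output_size(len(padded), f))  # 6: padding first restores the size
```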

1.3 Strided Convolutions

- With an $n\times n$ image, an $f\times f$ filter, padding $p$, and stride $s$, the output size is:

$$ \bigg\lfloor\frac{n+2p-f}{s}+1\bigg\rfloor\times\bigg\lfloor\frac{n+2p-f}{s}+1\bigg\rfloor $$
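The output-size formula above translates directly into integer arithmetic. A minimal sketch, with the function name `conv_output_size` being an assumption of this example:

```python
def conv_output_size(n, f, p=0, s=1):
    """floor((n + 2p - f) / s + 1) for one spatial dimension."""
    if n + 2 * p < f:
        raise ValueError("filter is larger than the padded input")
    # Integer floor division implements the floor in the formula.
    return (n + 2 * p - f) // s + 1


print(conv_output_size(7, 3, p=0, s=2))  # 3
print(conv_output_size(6, 3, p=1, s=1))  # 6 (a same convolution)
print(conv_output_size(7, 3, p=0, s=3))  # 2 (the floor drops the partial window)
```

The floor matters when the stride does not divide evenly: the filter is only applied where it fits entirely inside the (padded) image.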

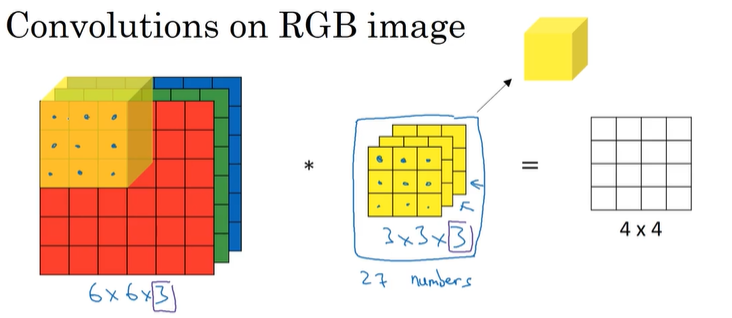

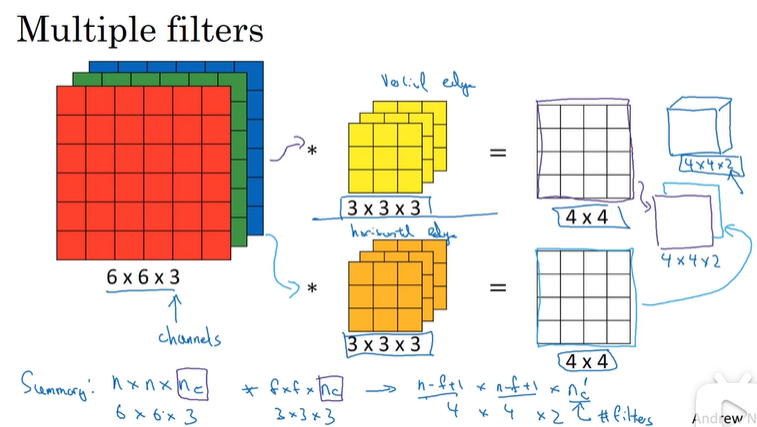

1.4 Convolutions over volumes

The number of channels (depth, the third dimension) of the filter must equal the number of channels of the input.

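Multiple filters: each filter produces one 2-D output, and stacking the outputs of $n_C'$ filters gives an output volume with $n_C'$ channels.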
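A rough sketch of convolving a volume with several filters, assuming nested Python lists indexed as `image[h][w][c]`. The function name `conv_volume` and the all-ones test data are illustrative, not from the course.

```python
def conv_volume(image, filters):
    """Valid, stride-1 convolution of image[h][w][c] with a list of f x f x c filters.

    Returns out[h'][w'][k], where k indexes the filters.
    """
    n_h, n_w, n_c = len(image), len(image[0]), len(image[0][0])
    f = len(filters[0])
    for flt in filters:
        if len(flt[0][0]) != n_c:
            raise ValueError("filter depth must match the input's channel count")
    out = []
    for i in range(n_h - f + 1):
        out_row = []
        for j in range(n_w - f + 1):
            cell = []
            for flt in filters:
                total = 0
                for u in range(f):
                    for v in range(f):
                        for c in range(n_c):
                            total += image[i + u][j + v][c] * flt[u][v][c]
                cell.append(total)  # one output channel per filter
            out_row.append(cell)
        out.append(out_row)
    return out


# Hypothetical 6x6x3 input filled with ones, and two 3x3x3 filters.
image = [[[1, 1, 1] for _ in range(6)] for _ in range(6)]
ones_filter = [[[1] * 3 for _ in range(3)] for _ in range(3)]
zeros_filter = [[[0] * 3 for _ in range(3)] for _ in range(3)]

out = conv_volume(image, [ones_filter, zeros_filter])
print(len(out), len(out[0]), len(out[0][0]))  # 4 4 2
print(out[0][0])                              # [27, 0]
```

Each filter sums over all three channels at once, so one filter still yields a single 2-D map; the output depth comes only from the number of filters.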

1.5 One Layer of a CNN

If layer l is a convolution layer:

- $f^{[l]}=$filter size

- $p^{[l]}=$padding

- $s^{[l]}=$stride

- $n_C^{[l]}=$number of filters

- Each filter is: $f^{[l]}\times f^{[l]}\times n_C^{[l-1]}$

- Weights: $f^{[l]}\times f^{[l]}\times n_C^{[l-1]}\times n_C^{[l]}$

- Bias: $n_C^{[l]}$ values, stored with shape $(1,1,1,n_C^{[l]})$

- Input: $n^{[l-1]}_H\times n^{[l-1]}_W\times n^{[l-1]}_C$

- Output: $a^{[l]}$ with shape $n^{[l]}_H\times n^{[l]}_W\times n^{[l]}_C$

$$ n^{[l]}=\bigg\lfloor\frac{n^{[l-1]}+2p^{[l]}-f^{[l]}}{s^{[l]}}+1\bigg\rfloor $$
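The notation above can be turned into a small shape and parameter calculator. This is a sketch under the stated definitions; the function name `conv_layer_summary` and the 32x32x3 input are assumptions for the example.

```python
def conv_layer_summary(n_h_prev, n_w_prev, n_c_prev, f, p, s, n_c):
    """Shapes and parameter count of one convolution layer."""
    n_h = (n_h_prev + 2 * p - f) // s + 1
    n_w = (n_w_prev + 2 * p - f) // s + 1
    return {
        "filter_shape": (f, f, n_c_prev),          # each filter spans all input channels
        "weights_shape": (f, f, n_c_prev, n_c),    # one filter per output channel
        "bias_shape": (1, 1, 1, n_c),
        "output_shape": (n_h, n_w, n_c),
        "num_params": f * f * n_c_prev * n_c + n_c,
    }


# Hypothetical layer: 32x32x3 input, ten 3x3 filters, same padding, stride 1.
summary = conv_layer_summary(32, 32, 3, f=3, p=1, s=1, n_c=10)
print(summary["output_shape"])  # (32, 32, 10)
print(summary["num_params"])    # 280 = (3*3*3 weights + 1 bias) * 10 filters
```

Note that the parameter count does not depend on the input's height or width, only on the filter size and channel counts.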

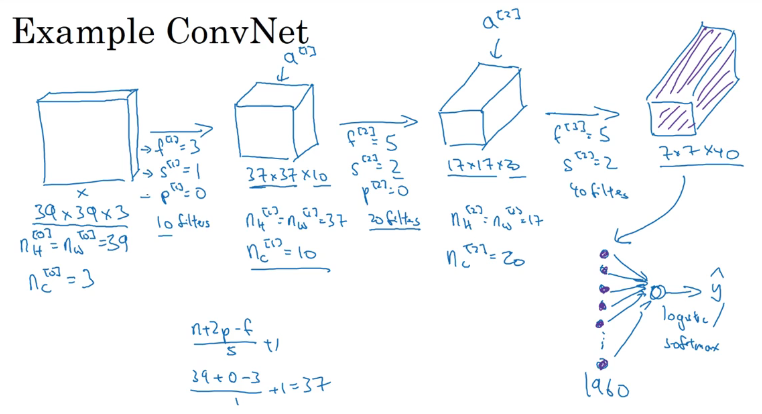

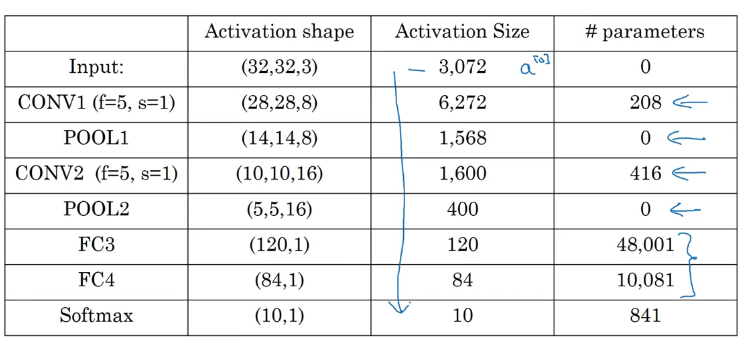

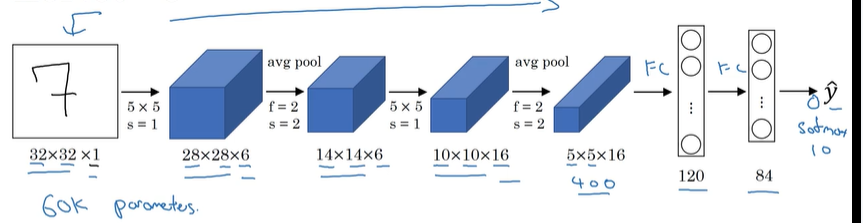

1.6 Example ConvNet

The height and width typically stay the same for a while and then gradually decrease as you go deeper into the network.

The number of channels gradually increases.

Types of layer in a convolutional network:

- Convolution (CONV)

- Pooling (POOL)

- Fully connected (FC)
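To make the trend in height, width, and channels concrete, the sketch below traces the activation shape through a small stack of CONV and POOL layers. The layer settings are hypothetical values picked for illustration, not the network shown in the figure.

```python
def out_size(n, f, p, s):
    """floor((n + 2p - f) / s) + 1 for one spatial dimension."""
    return (n + 2 * p - f) // s + 1


# (layer type, f, p, s, number of filters); POOL keeps the channel count.
layers = [
    ("CONV", 3, 1, 1, 8),     # same convolution: height/width unchanged
    ("POOL", 2, 0, 2, None),  # halves height and width
    ("CONV", 3, 1, 1, 16),
    ("POOL", 2, 0, 2, None),
    ("CONV", 3, 0, 1, 32),    # valid convolution
]

shape = (32, 32, 3)  # hypothetical input image
shapes = [shape]
for kind, f, p, s, n_filters in layers:
    h, w, c = shape
    c_out = c if kind == "POOL" else n_filters
    shape = (out_size(h, f, p, s), out_size(w, f, p, s), c_out)
    shapes.append(shape)
    print(kind, shape)

# Flatten the last volume before handing it to the fully connected layers.
fc_inputs = shape[0] * shape[1] * shape[2]
print("FC input units:", fc_inputs)  # 1152
```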

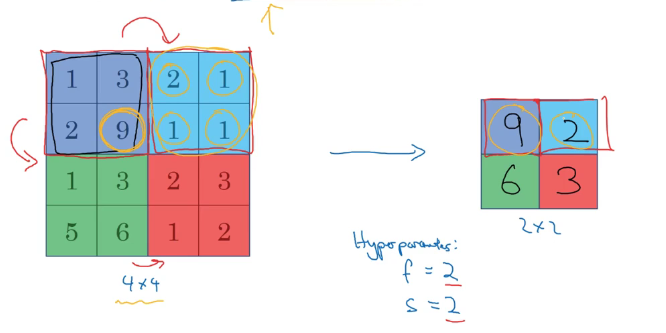

1.7 Pooling layers

- Max pooling

Pooling has only hyperparameters (such as $f$ and $s$), which are fixed; it has no parameters to learn.

$$ n^{[l]}=\bigg\lfloor\frac{n^{[l-1]}+2p^{[l]}-f^{[l]}}{s^{[l]}}+1\bigg\rfloor $$
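A minimal max pooling sketch, assuming no padding ($p=0$), which is the common case. The function name `max_pool2d` and the 4x4 input are illustrative.

```python
def max_pool2d(image, f, s):
    """Max pooling over a 2-D list with window size f and stride s (no padding)."""
    n_h, n_w = len(image), len(image[0])
    out_h = (n_h - f) // s + 1
    out_w = (n_w - f) // s + 1
    return [
        [
            max(image[i * s + u][j * s + v] for u in range(f) for v in range(f))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


image = [
    [1, 3, 2, 1],
    [2, 9, 1, 1],
    [1, 3, 2, 3],
    [5, 6, 1, 2],
]

pooled = max_pool2d(image, f=2, s=2)
print(pooled)  # [[9, 2], [6, 3]]
```

Nothing in `max_pool2d` is learned: `f` and `s` are fixed hyperparameters, so backpropagation has no weights to update in this layer.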

1.8 Neural network example

2 Deep Convolutional Models: Case Study

2.1 Classic Networks

LeNet-5

- Trained on grayscale images.

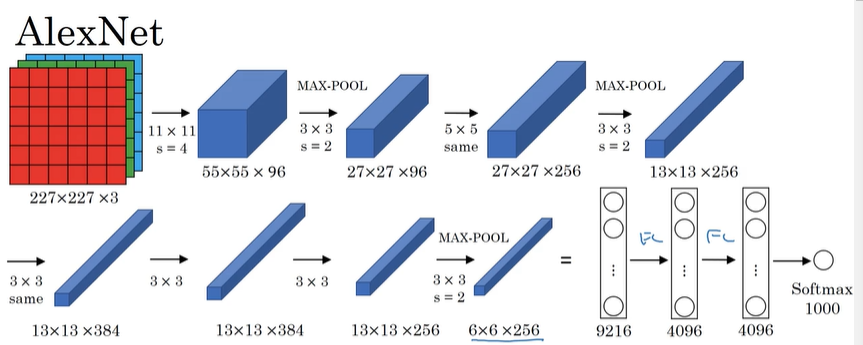

AlexNet

- Similar to LeNet, but much bigger.

- $\approx60M$ parameters.

- Use ReLU

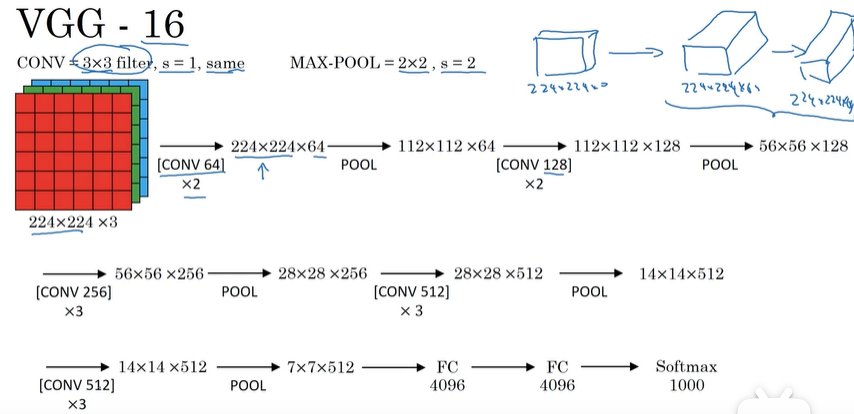

VGG-16

- 16 layers in total (counting the layers that have weights).

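- The CONV filters and the POOL layers use the same fixed configuration throughout the network (3×3 filters with stride 1 and same padding; 2×2 max pooling with stride 2).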

- $\approx138M$ parameters.

- Performs about as well as VGG-19.
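The $\approx138M$ figure can be reproduced with a short count. This sketch assumes the standard VGG-16 configuration (224×224×3 input, 3×3 same convolutions, 2×2 max pooling after each block, and fully connected layers of 4096, 4096, and 1000 units); none of those numbers are stated in these notes, and the function name is hypothetical.

```python
# (number of 3x3 CONV layers, number of filters) per block.
# Assumed: each block ends with 2x2 max pooling, stride 2.
conv_blocks = [(2, 64), (2, 128), (3, 256), (3, 512), (3, 512)]
fc_units = [4096, 4096, 1000]  # assumed: 1000-class output


def vgg16_param_count(input_size=224, in_channels=3):
    """Return (total parameters, number of layers with weights)."""
    total = 0
    weight_layers = 0
    channels = in_channels
    size = input_size
    for num_convs, filters in conv_blocks:
        for _ in range(num_convs):
            total += 3 * 3 * channels * filters + filters  # weights + biases
            channels = filters
            weight_layers += 1
        size //= 2  # same convolutions keep the size; pooling halves it
    units_in = size * size * channels  # flattened 7 x 7 x 512 volume
    for units in fc_units:
        total += units_in * units + units
        units_in = units
        weight_layers += 1
    return total, weight_layers


total, weight_layers = vgg16_param_count()
print(total)          # 138357544
print(weight_layers)  # 16
```

Most of the parameters sit in the first fully connected layer, not in the convolutions.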

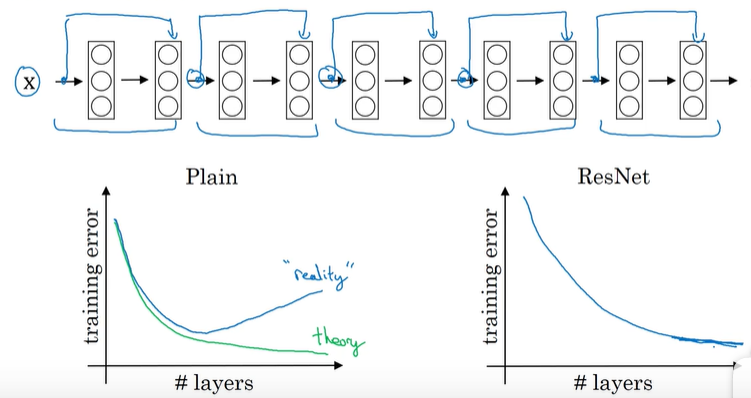

3 Residual Networks (ResNets)

Residual block

- Add a “shortcut” (skip connection) that feeds $a^{[l]}$ forward past two layers: $a^{[l+2]}=g(z^{[l+2]}+a^{[l]})$
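A minimal sketch of a residual block, computing $a^{[l+2]}=g(z^{[l+2]}+a^{[l]})$ with $g$ = ReLU. It uses small fully connected layers on plain Python lists rather than convolutions, and the helper names (`relu`, `dense`, `residual_block`) and the weights are assumptions for illustration only.

```python
def relu(values):
    return [max(0.0, x) for x in values]


def dense(weights, bias, a):
    """z = W a + b for a weight matrix given as a list of rows."""
    return [sum(w * x for w, x in zip(row, a)) + b for row, b in zip(weights, bias)]


def residual_block(a, w1, b1, w2, b2):
    """Two layers plus a skip connection; input and output sizes must match."""
    a1 = relu(dense(w1, b1, a))   # a[l+1] = g(z[l+1])
    z2 = dense(w2, b2, a1)        # z[l+2]
    # Skip connection: add the block input before the final activation.
    return relu([z + x for z, x in zip(z2, a)])


a = [1.0, 2.0, 0.5]
identity = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
zeros = [[0.0] * 3 for _ in range(3)]
no_bias = [0.0, 0.0, 0.0]

# With the second layer's weights at zero, the block passes its input through.
print(residual_block(a, identity, no_bias, zeros, no_bias))     # [1.0, 2.0, 0.5]
print(residual_block(a, identity, no_bias, identity, no_bias))  # [2.0, 4.0, 1.0]
```

The first call shows why the shortcut helps: when the added layers contribute nothing, the block behaves as the identity (for non-negative activations), so extra depth does not have to hurt.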